Museum Semantic Search

https://museum-semantic-search.vercel.app

https://github.com/derekphilipau/museum-semantic-search

Proof-of-concept for searching museum collections using AI embeddings and AI-generated visual descriptions. It offers cross-modal search via SigLIP 2 embeddings and text search via Jina v3 embeddings. The app is deployed on Vercel, with Modal used for GPU inference.

This project is meant as a starting point for experimentation and discussion around the use of AI in museum collections search, and should not be taken as advocating a specific approach.

Visual Descriptions

Gemini 2.5 Flash was used to generate three types of visual descriptions: alt text, long description, and emoji summary. The prompt was inspired by Cooper Hewitt Guidelines for Image Description.

The system uses a two-pass approach:

Generation Pass: Multimodal Gemini 2.5 Flash with image and metadata input. Creates initial descriptions following accessibility guidelines. Full prompt here.

Editorial Pass: Prompts Gemini 2.5 Flash again, this time only with the initial text outputs and the guidelines. Refines descriptions for consistency, removes biases, and ensures strict adherence to museum standards including objectivity, consistent word counts, and appropriate emoji selection. Full prompt here.
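A minimal sketch of the two-pass flow described above, not the project's actual code. The `call_model` callable is a hypothetical stand-in for a Gemini 2.5 Flash request (it is not the real SDK signature), and `GUIDELINES` is a placeholder rather than the real prompt. The point it illustrates is that the second pass receives only the draft text and the guidelines, never the image.

```python
from typing import Callable, Optional

# Hypothetical stand-in for a Gemini 2.5 Flash call: takes a prompt and
# optional image bytes, returns the model's text. Not the real SDK signature.
ModelCall = Callable[[str, Optional[bytes]], str]

# Placeholder text, not the project's actual prompt.
GUIDELINES = "Describe only what is visible; avoid names, emotions, and interpretation."


def generation_pass(call_model: ModelCall, image: bytes, metadata: dict) -> str:
    """Pass 1: multimodal call with the image and its metadata."""
    meta_text = "\n".join(f"{key}: {value}" for key, value in metadata.items())
    prompt = (
        f"{GUIDELINES}\n\nMetadata:\n{meta_text}\n\n"
        "Write alt text, a long description, and an emoji summary."
    )
    return call_model(prompt, image)


def editorial_pass(call_model: ModelCall, draft: str) -> str:
    """Pass 2: text-only call; the model sees the draft and guidelines, not the image."""
    prompt = f"{GUIDELINES}\n\nRevise this draft to follow the guidelines:\n{draft}"
    return call_model(prompt, None)


def describe_artwork(call_model: ModelCall, image: bytes, metadata: dict) -> dict:
    """Run both passes and keep the draft so the two outputs can be compared."""
    draft = generation_pass(call_model, image, metadata)
    revised = editorial_pass(call_model, draft)
    return {"draft": draft, "revised": revised}
```

Keeping the draft alongside the revised text makes it easy to review what the editorial pass changed, as in the example further down.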

Personal Conclusions:

In practice, the initial generation often deviates from the guidelines, and often contains cultural assumptions, interpretations, or subjective language. The secondary editorial pass is very helpful in ensuring high-quality, objective descriptions that strictly adhere to the Cooper Hewitt Guidelines.

When combined with text embedding search, visual descriptions significantly improve search relevance compared with basic metadata keyword search, for queries such as "woman holding a fan" or "man fighting a monster". See "Mining Archetypes" below for more examples.

Visual Descriptions Example: The Death of Socrates, Jacques Louis David, 1787

The Death of Socrates, Jacques Louis David, 1787

Generation Pass, Initial Visual Descriptions Multimodal Gemini 2.5 Flash output:

Alt Text: "Socrates, surrounded by grieving students, reaches for a cup of hemlock in a dimly lit prison cell"

Long Description: "The painting depicts a dramatic scene within a dimly lit, stone-walled room. In the center, an elderly, muscular man with a white beard, identified as Socrates, sits upright on a bed, draped in a white cloth. He gestures upwards with his right hand, as if speaking, while his left hand reaches for a small, red cup offered by a younger, muscular man in a red tunic, who turns away with a pained expression. To the right of Socrates, several men are gathered, showing various states of distress. One man in a red robe sits on a stone block, looking down thoughtfully. Behind him, others express anguish, some covering their faces, others raising their hands in despair. To the left of Socrates, an older man with a white beard and gray robe sits slumped on a bench, his head bowed in sorrow. Further back, in a darker arched doorway, more figures are visible, including a woman being led away. On the floor in the foreground, near the slumped man, are two rolled scrolls and a broken chain. The room's architecture is simple, with stone blocks forming the walls and an arched opening leading to a darker area. A small, dark object hangs from the ceiling. The overall lighting is dim, with a subtle glow on the central figures."

Emojis: 🧔🍷😭⛓️📜

Editorial Pass, Gemini 2.5 Flash Editorially Revised Output:

Alt Text: "An elderly man reaches for a cup, surrounded by distressed figures in a dimly lit stone room"

Long Description: "A dimly lit, stone-walled room contains multiple figures. In the center, an elderly, muscular man with a white beard sits upright on a bed, draped in a white cloth. He gestures upwards with his right hand, while his left hand reaches for a small, red cup. A younger, muscular man in a red tunic offers the cup, turning his head away from the elderly man with a downturned mouth. To the right of the central elderly man, several men are gathered, displaying varied postures. One man in a red robe sits on a stone block, looking downwards. Behind him, other figures cover their faces or raise their hands. To the left of the central elderly man, an older man with a white beard and gray robe sits slumped on a bench, his head bowed. Further back, in a darker arched doorway, more figures are visible, including a woman standing near another figure. On the floor in the foreground, near the slumped man, are two rolled scrolls and a broken chain. The room features stone block walls and an arched opening leading to a darker area. A small, dark object hangs from the ceiling. The overall lighting is dim, with a subtle glow on the central figures."

Emojis: 🧔🍷👥⛓️📜

Editorial Changes Made:

Alt Text: Removed specific name "Socrates."

Alt Text: Removed interpretive terms "grieving students," "hemlock," and "prison cell."

Alt Text: Replaced with objective visual descriptions like "distressed figures" and "stone room."

Alt Text: Adjusted word count to be closer to 15 words.

Long Description: Removed subjective phrase "The painting depicts a dramatic scene."

Long Description: Removed specific name "Socrates" and the phrase "identified as Socrates."

Long Description: Removed interpretive phrases such as "as if speaking," "pained expression," "various states of distress," "looking down thoughtfully," "express anguish," "raising their hands in despair," and "bowed in sorrow."

Long Description: Replaced character-specific references like "To the right of Socrates" with neutral spatial references like "To the right of the central elderly man."

Long Description: Rephrased "a woman being led away" to "a woman standing near another figure" to remove implied action/intent.

Long Description: Removed subjective judgment "The room's architecture is simple."

Long Description: Replaced emotional descriptions of figures with objective descriptions of their postures and expressions (e.g., "downturned mouth," "displaying varied postures," "cover their faces").

Emoji Summary: Removed "😭" emoji as it represents an emotion, which is explicitly forbidden.

Emoji Summary: Added "👥" emoji to represent the group of multiple figures, ensuring all main visual elements are covered objectively.

Search Comparison

Despite sometimes questionable results, text embedding search with AI-generated visual descriptions seems to work well in practice. Image embedding search also shows promise, although it seems less reliable and often produces strange results.

Below are comparisons of keyword search, text embedding search, and image embedding search for the query "woman looking into mirror".

Search for "woman looking into mirror"

Out of a result set of 20:

The conventional Elasticsearch keyword search over Met Museum metadata produces only 3 results that I consider highly relevant.

Text embedding search using Jina v3 embeddings on combined metadata and AI-generated descriptions returns 13 excellent results, including a number of images where the reflection or mirror is not even visible.

Image embedding search using SigLIP 2 cross-modal embeddings returns 8 highly-relevant results, including artworks where there's no actual mirror, but rather the concept of mirroring, for example "Portrait of a Woman with a Man at a Casement" by Fra Filippo Lippi and "Dancers, Pink and Green" by Edgar Degas.
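For readers unfamiliar with embedding search, the ranking step behind both the text and image results can be sketched as a cosine-similarity comparison between a query vector and each artwork vector. This is a brute-force illustration on toy 3-dimensional vectors, not necessarily how the project runs its vector search; the function names and data layout are assumptions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], artworks: dict[str, list[float]], k: int = 20):
    """Return up to k (artwork_id, score) pairs, most similar first."""
    scored = [
        (cosine_similarity(query_vec, vec), art_id)
        for art_id, vec in artworks.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(art_id, score) for score, art_id in scored[:k]]


# Toy vectors standing in for real embeddings.
toy_artworks = {
    "a": [1.0, 0.0, 0.0],
    "b": [0.0, 1.0, 0.0],
    "c": [1.0, 1.0, 0.0],
}
results = top_k([1.0, 0.0, 0.0], toy_artworks, k=20)
```

In the text case the query and the artwork descriptions would be embedded by the same text model; in the cross-modal case the query text and the artwork images would be embedded into a shared space.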

Results that I found exciting are highlighted in the image below. A number of these I probably would have missed if browsing through images.

Text embeddings search result for "woman looking into mirror".

Difficult to see: the woman on the left is looking into a mirror.

Portrait of a Woman with a Man at a Casement by Fra Filippo Lippi

Image embeddings search result for "woman looking into mirror".

Perhaps the woman is not looking into a mirror, but it does feel like a mirroring.

Mining Archetypes

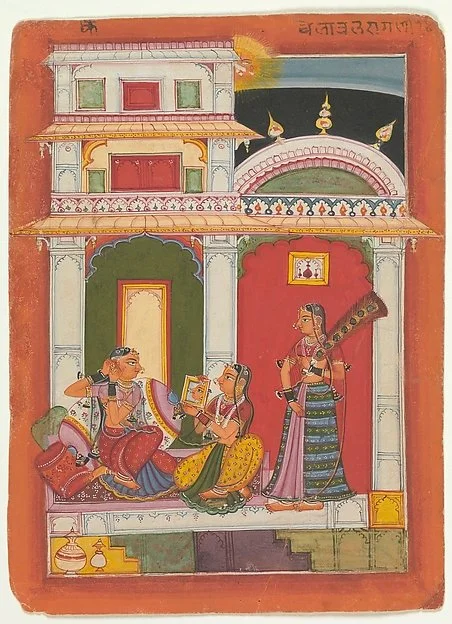

Intrigued by the results for "woman looking into mirror", I started to search for other art history archetypes to explore across cultures & time. Although the embedding searches are often inaccurate, interspersed are some surprisingly relevant results that would not have been possible with keyword search alone.

Curated Search Results for Various Archetypes

Searches:

AI-Generated Emojis

Sometimes strangely accurate, revealing details I missed; at other times questionable and problematic; and often hilarious. Of dubious practical use, but fun.

Visualize Embeddings

The /visualize page lets you explore how artworks cluster in the embedding space. You can see the entire collection as dots on a 2D map, where similar artworks appear near each other. It uses UMAP to reduce the 768-dimensional embeddings to 2D while trying to preserve neighborhood relationships.

Screenshot of Visualize UI

Each dot represents one artwork in the collection

Distance between dots shows semantic similarity: closer dots are more similar

Search to highlight relevant results. Larger, brighter dots rank higher

Color dots by artist, period, or tags to reveal patterns

The map visualizes patterns in the text embeddings, grouping artworks into clusters based on shared themes, styles, and subjects. For example, "Portraits of Men" and "Portraits of Women" are clustered near each other, as are "Horses" and "Men on Horses". Distinct traditions like "Ancient Indian Manuscripts" form their own separate regions. Some clusters represent very specific subjects, like bamboo, dragons, and tigers. This 2D projection is just a simplified view, showing only a fraction of the more complex relationships that exist in the higher-dimensional embeddings space.
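The search highlighting described in the list above (larger, brighter dots rank higher) could be implemented roughly as follows. This is a sketch under assumptions: the function name, the linear scaling, and the radius and opacity ranges are all hypothetical and are not taken from the project's code.

```python
def dot_style(
    rank: int,
    total: int,
    min_radius: float = 2.0,
    max_radius: float = 10.0,
    min_opacity: float = 0.3,
) -> dict:
    """Map a 0-based search rank to a dot radius and opacity.

    The best-ranked result gets the largest, fully opaque dot; the last
    result gets the smallest, most transparent one.
    """
    if total <= 1:
        weight = 1.0
    else:
        # 1.0 for the top result, falling linearly to 0.0 for the last.
        weight = 1.0 - rank / (total - 1)
    radius = min_radius + weight * (max_radius - min_radius)
    opacity = min_opacity + weight * (1.0 - min_opacity)
    return {"radius": radius, "opacity": opacity}


# Styles for a result set of five artworks, best match first.
styles = [dot_style(rank, 5) for rank in range(5)]
```

A linear mapping is the simplest choice; a real UI might prefer a non-linear scale so the top few results stand out more.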

Example Journeys

Try these search progressions to see how the embedding space organizes concepts:

"landscape" > "mountain" > "snowy mountain": watch the cluster narrow

"woman" > "woman smiling" > "woman with hat": see portraits organize by attributes

"blue" > "blue sky" > "stormy sky": explore how color and mood interact

Examples of various search term clusters

AI Curated Similarity (Pre-computed LLM Reranking)

The AI curation process:

Retrieves top 20 candidates from metadata and text embeddings searches, 5 candidates from image embeddings search

Removes duplicates and presents candidates without scores to avoid bias

Applies art historical expertise to select truly meaningful connections

Enforces diversity rules (max 3 per artist, max 8 per similarity type)

Returns up to 20 curated recommendations with confidence scores

Uses Gemini 2.5 Flash to intelligently select and rank similar artworks:

Cross-cultural connections: Discovers relationships across time periods and cultures (e.g., Gauguin's Tahitian Madonna with Renaissance Madonnas)

Thematic relationships: Identifies shared subjects and motifs beyond surface similarities

Visual intelligence: Considers composition, style, and emotional resonance

Diversity-aware: Limits over-representation of single artists or similarity types

Explainable: Each recommendation includes a brief explanation of the connection
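The candidate-gathering and diversity steps of the curation process described above can be sketched as plain post-processing. Several things here are assumptions: the field names (`id`, `artist`, `score`, `similarity_type`) are hypothetical, "top 20" is read as 20 from each of the metadata and text searches, and the source does not say whether the diversity rules are enforced in the prompt or in code. The LLM selection step itself is left out.

```python
from collections import Counter


def merge_candidates(metadata_hits: list, text_hits: list, image_hits: list) -> list:
    """Combine the three result lists, drop duplicates, and strip scores."""
    merged = {}
    for hits, limit in ((metadata_hits, 20), (text_hits, 20), (image_hits, 5)):
        for hit in hits[:limit]:
            # Keep only the first occurrence of each artwork, and omit
            # "score" so the LLM is not biased by retrieval scores.
            if hit["id"] not in merged:
                merged[hit["id"]] = {k: v for k, v in hit.items() if k != "score"}
    return list(merged.values())


def enforce_diversity(
    picks: list,
    max_per_artist: int = 3,
    max_per_type: int = 8,
    max_total: int = 20,
) -> list:
    """Filter ranked picks so no artist or similarity type dominates."""
    by_artist, by_type, kept = Counter(), Counter(), []
    for pick in picks:
        if len(kept) >= max_total:
            break
        if by_artist[pick["artist"]] >= max_per_artist:
            continue
        if by_type[pick["similarity_type"]] >= max_per_type:
            continue
        by_artist[pick["artist"]] += 1
        by_type[pick["similarity_type"]] += 1
        kept.append(pick)
    return kept
```

In this sketch `merge_candidates` would run before the LLM call and `enforce_diversity` after it, on the model's ranked selections.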

AI-Curated Similarity Example

See Example here: Holy Family with Saint Anne, French Painter (17th century)

For this example, the keyword search, which relies only on metadata, does a poor job of finding relevant similar artworks and pulls in various works by an unknown "French Painter". The text and image embedding results are better, especially on theme and style. The AI-curated results are perhaps the best, in my opinion, but I'm not an art historian and not familiar enough with the collection to make an educated judgment.

Diagram of AI-Curated Similarity Process

Related

Musefully (website, github): Search across museums using Elasticsearch and Next.js

“Accessible Art Tags” GPT: a specialized GPT that generates alt text and long descriptions following Cooper Hewitt Guidelines for Image Description.

OpenAI CLIP Embedding Similarity: Examples of OpenAI CLIP Embeddings artwork similarity search.

Related Projects

MuseRAG++: A Deep Retrieval-Augmented Generation Framework for Semantic Interaction and Multi-Modal Reasoning in Virtual Museums: RAG-powered museum chatbot

National Museum of Norway Semantic Collection Search (Website, Article): Search via embeddings of GPT-4 Vision image descriptions.

Semantic Art Search (Github, Website): Explore art through meaning-driven search

Sketchy Collections (Github, Website): CLIP-based image search tool that lets you explore artworks by drawing or uploading a picture